Imagine waking up to a video of Taylor Swift telling you she’s giving away $10,000 to the first 100 people who click a link. If you were tempted, you’re not alone: thousands of people clicked links like that in 2025 and lost their savings. These aren’t old-school phishing emails. They are hyper-realistic, AI-generated videos that look and sound exactly like the celebrity you trust. This is the new face of fraud: celebrity endorsement scams powered by deepfakes.

How Deepfakes Are Used to Trick You

Deepfake technology doesn’t just make funny memes anymore. It’s being weaponized. Criminals use AI to clone a celebrity’s voice, facial expressions, and even their signature laugh. They stitch together footage from public interviews, concerts, and social media posts. Then they drop that fake video into a WhatsApp group, Instagram ad, or YouTube short with a simple message: "This is real. Click now. Limited time." The most common scams? Fake cryptocurrency giveaways, fake investment apps, and counterfeit product launches. In one case, a deepfake of Elon Musk promoted a "Bitcoin doubling" scheme. Victims sent money to a wallet, only to find the link dead the next day. The FBI has recorded over 4.2 million fraud reports since 2020, and deepfakes are now one of the fastest-growing parts of that number.

Who’s Being Targeted, and Why

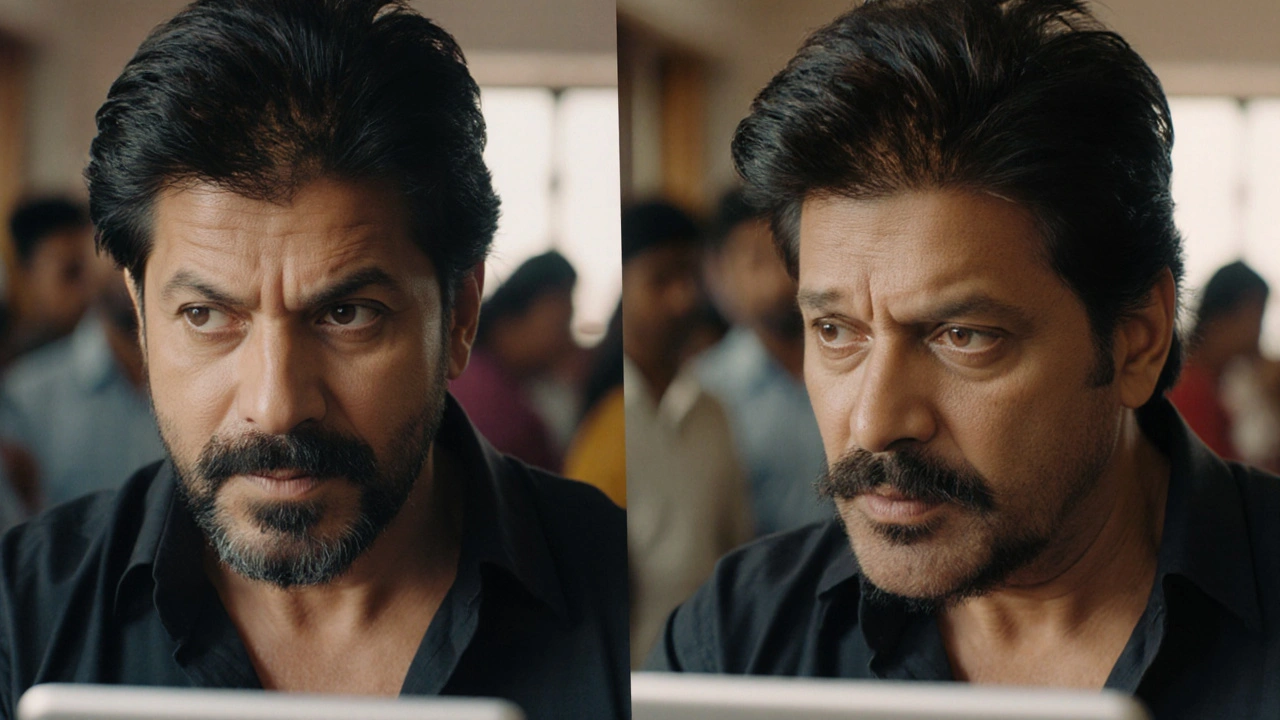

It’s not just tech-savvy people falling for this. The biggest victims? People aged 25 to 44. Why? They’re the ones scrolling social media the most, trusting influencers, and open to "exclusive" deals. McAfee’s 2025 report found that 60% of Indians in that age group clicked on fake celebrity ads. In India, where celebrity culture is massive and digital literacy uneven, 90% of people have seen AI-generated celebrity content. Shah Rukh Khan, Alia Bhatt, and even Elon Musk are top targets there. Globally, Taylor Swift is the most impersonated celebrity in scams. Why her? She has a huge fanbase, frequent public appearances, and emotional connections with millions. Scammers know: if you love her, you’ll believe she’s giving you money. And because the videos are so good, even skeptics get fooled.

How to Spot a Fake Celebrity Endorsement

You don’t need to be a tech expert to tell real from fake. Here’s what to look for:

- Unnatural blinking: Real people blink at a natural, irregular rhythm. Deepfakes often blink too little, too much, or out of sync with speech.

- Audio-video mismatch: If the lips don’t move exactly with the words, it’s a red flag. Even the best deepfakes struggle with perfect sync.

- Weird lighting or shadows: Look at reflections in eyes or on skin. Deepfakes often get lighting wrong, especially around the jawline or forehead.

- Too-good-to-be-true offers: No celebrity is giving away $10,000 via a random link. Ever.

- Pressure to act fast: "Only 3 spots left!" "This offer expires in 5 minutes!" That’s classic scam language.
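The last two red flags are about wording rather than video quality, so they can be illustrated with a simple text check. The sketch below is only an illustration: the phrase lists and the `red_flags` function are assumptions made up for this example, not a real detection tool, and real scam captions will vary far more than these patterns cover.

```python
import re

# Hypothetical phrase lists, chosen for illustration only.
URGENCY_PATTERNS = [
    r"only \d+ spots? left",
    r"expires? in \d+ (minutes?|hours?)",
    r"limited time",
    r"click now",
]
GIVEAWAY_PATTERNS = [
    r"giving away",
    r"free (money|crypto|bitcoin|iphones?)",
    r"doubl(e|ing)",
]


def red_flags(text: str) -> list[str]:
    """Return the wording red flags found in an ad caption or message."""
    lowered = text.lower()
    flags = []
    if any(re.search(p, lowered) for p in URGENCY_PATTERNS):
        flags.append("pressure to act fast")
    if any(re.search(p, lowered) for p in GIVEAWAY_PATTERNS):
        flags.append("too-good-to-be-true offer")
    return flags


if __name__ == "__main__":
    print(red_flags("Taylor is giving away $10,000! Only 3 spots left!"))
```

An empty result does not mean a message is safe. A check like this only catches the clumsiest wording, which is why the visual cues and the habit of verifying elsewhere still matter.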

Where These Scams Live

You won’t find these scams on official celebrity websites. They live where people don’t expect fraud:

- Instagram Reels and TikTok: Short videos with catchy music and flashy text. Easy to share, hard to trace.

- WhatsApp forwards: People trust messages from friends. A fake video sent by someone you know feels real.

- YouTube Shorts: Fake celebrity channels with thousands of views, often bought with bots.

- Facebook ads: Paid ads targeting fans of specific celebrities. They look just like real sponsored posts.

What’s Being Done to Stop It

Governments and tech companies are finally catching up. India’s Ministry of Electronics and Information Technology passed the Deepfake Accountability Act, effective January 1, 2026. It requires all AI-generated content to carry a digital watermark. That means, in theory, you’ll be able to tell whether a video is AI-generated just by checking its metadata.

Microsoft’s Video Authenticator 2.0, released in November 2025, can detect deepfakes with 98.7% accuracy in controlled tests. Banks like HDFC and ICICI in India now use AI tools to flag suspicious videos before customers act. ICICI now requires three-factor authentication for any transaction over ₹10,000 ($120) if it’s triggered by a social media ad.

But the biggest effort? The Global Deepfake Registry, set to launch in Q2 2026. Led by the World Economic Forum, it will store verified biometric data of celebrities, such as voiceprints and facial patterns, on a blockchain. If a video claims to be Taylor Swift, the system can check it against her real signature. No match? It’s fake.

What You Can Do Right Now

You can’t wait for governments or tech giants to fix this. Here’s what you should do today:

- Never click links in unsolicited videos, even if they look real. Go to the celebrity’s official website or social profile directly.

- Verify with at least two sources. If you see a "new product" from a celebrity, check their verified Twitter/X account, official website, and news outlets. If none mention it, it’s fake.

- Use a deepfake checker. Tools like Microsoft’s Video Authenticator or Intel’s FakeCatcher (free web versions available) can scan videos in seconds.

- Teach older family members. People over 65 are far less likely to fall for these scams, but they’re often targeted because they’re less tech-savvy. Show them what to look for.

- Report it. If you see a fake celebrity ad, report it to the platform. Also file a report with your country’s cybercrime unit. The more reports, the faster platforms act.
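The source-verification step in the list above can be written down as a small decision rule. This is a minimal sketch under stated assumptions: the source labels, the `assess_endorsement` function, and the middle "unconfirmed" outcome are all hypothetical. The article itself only says that if none of the sources mention the offer, it is fake.

```python
# Hypothetical labels for the three places the article says to check.
TRUSTED_SOURCES = {"verified_account", "official_website", "news_outlet"}


def assess_endorsement(confirmed_by: set[str]) -> str:
    """Classify a claimed endorsement by which trusted sources mention it."""
    confirmations = confirmed_by & TRUSTED_SOURCES
    if not confirmations:
        # No trusted source mentions it: the article's rule says it is fake.
        return "treat as fake"
    if len(confirmations) < 2:
        # Assumption for this sketch: one mention alone is not enough.
        return "unconfirmed: keep checking"
    return "confirmed by two or more sources"


if __name__ == "__main__":
    # A WhatsApp forward is not a trusted source, so it does not count.
    print(assess_endorsement({"whatsapp_forward"}))
```

The point of the rule is that the video itself never counts as evidence. Only places the scammer does not control do.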

The Bigger Picture

This isn’t just about money. It’s about trust. We used to believe what we saw and heard. Now, we can’t. MIT’s Center for Cybersecurity predicts that by 2028, 95% of online video will be AI-generated. That means the line between real and fake will vanish unless we build systems to detect it. The FBI’s Assistant Director Jose A. Perez put it plainly: "Educating the public about this emerging threat is key to preventing these scams and minimizing their impact." The truth? No amount of AI can replace human caution. The best defense isn’t a tool; it’s a habit. Slow down. Question. Verify. If it feels too good to be true, it probably is.

Can deepfake celebrity scams be completely stopped?

No, not completely. As AI gets better, so do the scams. But they can be drastically reduced. Watermarking laws, real-time verification systems like the Global Deepfake Registry, and public awareness are making it harder for scammers to succeed. The goal isn’t perfection; it’s making fraud too risky and too slow to be profitable.

Are only rich people targeted by these scams?

No. Scammers target everyone. They don’t care if you have $10 or $10,000. A small amount from thousands of people adds up fast. In India, victims lost an average of ₹34,500 ($415), which is not life-changing for some but devastating for others. The goal is volume, not value.

Why do deepfake videos look so real now?

Because AI models have been trained on millions of real videos of celebrities. They learn how someone moves, speaks, blinks, and even pauses. Modern tools like GANs and diffusion models can generate frames so smooth they fool the human eye. What used to take days now takes minutes, and the quality keeps improving.

Can I report a deepfake scam if I didn’t lose money?

Yes, and you should. Reporting a fake video, even if you didn’t click it, helps platforms remove it faster and builds data for law enforcement. Many scams are caught because someone reported them before they could harm others.

Are there free tools to check if a video is a deepfake?

Yes. Microsoft’s Video Authenticator has a free web version. Intel’s FakeCatcher also offers a free browser extension. Both let you upload a video and get a detection score. They’re not perfect, but they’re better than guessing.

Why is India hit so hard by these scams?

India has a huge population, massive social media use, and a deep cultural connection to celebrities. Bollywood stars are treated like gods. When a fake video of Shah Rukh Khan says "invest here," people believe it. Combine that with uneven digital literacy and rapid internet growth, and you get the perfect storm for fraud.

Do celebrities ever profit from these scams?

No. Celebrities are victims too. They don’t profit. They’re sued, their reputation is damaged, and they have to issue public statements denying involvement. Many are now working with tech firms to create official biometric signatures to fight impersonation.

What should I do if I’ve already sent money to a deepfake scam?

Act fast. Contact your bank or payment provider immediately; some can reverse transactions if done within 24 hours. File a report with your local cybercrime unit. Save the video, screenshots, and any messages. Don’t delete anything. Even if you can’t get your money back, your report helps others avoid the same trap.

sonny dirgantara

November 20, 2025 AT 17:15

bro i just saw a taylor swift video on tiktok saying to send crypto for free money and i almost did it lmao

Jamie Roman

November 21, 2025 AT 19:13

It’s wild how much we’ve outsourced our critical thinking to algorithms and influencers. We used to check a newspaper or call a friend before trusting something. Now we just watch a 15-second clip and hand over our bank info. The real tragedy isn’t the deepfakes themselves, it’s that we’ve built a culture where emotional resonance overrides verification. I’ve shown my parents how to spot the blinking glitches, and honestly, it’s the only thing that’s kept them from getting scammed. The tech’s advancing faster than our brains can adapt, and until we teach people to pause before they react, this is just going to get worse. It’s not about being tech-savvy, it’s about being human-savvy.

Salomi Cummingham

November 22, 2025 AT 23:58

Oh my god. I just watched a video of Alia Bhatt on WhatsApp telling people to invest in a ‘new Bollywood NFT’, and my aunt sent ₹15,000. I screamed. I cried. I sent her 17 voice notes explaining blinking patterns. She still says ‘but it sounded just like her!’ I don’t know how to fix this. How do you untrust someone you’ve loved since childhood? This isn’t fraud, it’s emotional terrorism. I’m terrified for my mom, my cousins, everyone who grew up idolizing stars like gods. We need more than tools. We need therapy for digital grief.

Johnathan Rhyne

November 23, 2025 AT 23:22

Let’s be real: this article is a masterpiece of fearmongering with a side of grammatical overkill. ‘Hyper-realistic videos’? Newsflash: they’re still glitchy. ‘Signature laugh’? No one has a ‘signature laugh’ except maybe Jerry Seinfeld. And don’t even get me started on ‘AI-generated celebrity content’. That’s just what happens when you have a camera and a YouTube channel. Also, ‘global deepfake registry’? Sounds like a Silicon Valley startup’s pitch deck. The real issue? People are lazy. Not the tech. Not the AI. PEOPLE. Stop blaming the machine and start blaming the meatbag clicking the link.

Jawaharlal Thota

November 24, 2025 AT 23:12

As someone from India, I’ve seen this firsthand. My cousin’s friend sent a video of Shah Rukh Khan saying he’s giving away free iPhones if you download a ‘secure app.’ She did. Lost ₹28,000. The worst part? She didn’t even know how to check if the account was verified. We need community workshops: village halls, local schools, temple gatherings. Not just government apps or fancy blockchain registries. People trust their neighbors more than their phones. If we train aunties and uncles to spot the unnatural pauses, the mismatched lip sync, the weird shadows on the forehead, we can stop this before it spreads. It’s not about tech; it’s about teaching dignity in skepticism. We’re not stupid; we’re just not taught how to question what we’re shown.

Lauren Saunders

November 25, 2025 AT 12:22

Ugh. Another ‘deepfake panic’ article written by someone who thinks ‘glitchy blinking’ is a diagnostic tool. This isn’t a crisis, it’s a marketing opportunity for cybersecurity firms. Microsoft’s ‘Video Authenticator’? It’s a beta tool with 98.7% accuracy in lab conditions. In the wild? It fails constantly. And the ‘Global Deepfake Registry’? A blockchain solution to a social problem. Brilliant. Meanwhile, the real issue, media literacy, is being ignored because it’s not monetizable. Also, why are we still talking about Taylor Swift? She’s not even the most impersonated celebrity in the U.S. anymore. It’s now Oprah. And no, I’m not joking. The data’s out there. You just don’t want to see it because it doesn’t fit your narrative.

Andrew Nashaat

November 26, 2025 AT 17:49

Let’s get one thing straight: if you click a link that says ‘Taylor Swift is giving you $10,000,’ you deserve to lose your money. Not because it’s a deepfake, but because you’re an idiot. You didn’t pause. You didn’t think. You didn’t even Google it. You just saw a pretty face and your dopamine fired. And now you’re crying about ‘trust’? Please. The real crime is not the AI. It’s the fact that you think your emotional attachment to a celebrity overrides basic logic. Also, ‘unrealistic blinking’? That’s not a flaw, it’s a feature of bad CGI. If you can’t tell the difference between a real human and a 3D render, maybe don’t use the internet. And stop blaming the system. You’re the system.

Gina Grub

November 27, 2025 AT 09:59

Deepfakes aren’t the threat. The threat is the collapse of epistemic authority. We no longer have institutions we trust: no newspapers, no broadcasters, no verified accounts. So we outsource truth to aesthetics: a smile, a tone, a laugh. The deepfake is just the symptom. The disease is the death of skepticism. And now? We’re all just waiting for the next AI-generated Putin to announce World War III. And we’ll believe it because it looks real. We’re not being scammed, we’re being hypnotized. And the worst part? We’re loving it.

Nathan Jimerson

November 29, 2025 AT 03:21

I’ve seen a lot of these scams in India, and honestly, it’s heartbreaking. But I also see hope. My younger brother, who’s 19, taught our whole family how to use the Microsoft checker. We now have a rule: no money sent unless three people confirm it’s real. It’s slow. It’s annoying. But we’ve stopped three scams already. The tech isn’t perfect, but people can be. We just need to slow down and talk to each other. That’s the real fix.

Sandy Pan

November 30, 2025 AT 23:00

What if the real question isn’t how to detect deepfakes, but how to rebuild trust in a world where perception is programmable? We used to believe in truth because it was consistent, observable, repeatable. Now, truth is a variable. If a video of Taylor Swift can be generated in 12 minutes, what does that mean for our understanding of authenticity? Are we becoming post-truth beings? Not because we’re stupid, but because the environment has changed. The solution isn’t just education, it’s philosophy. We need to teach people not just how to spot fakes, but why believing in something real matters. Otherwise, we’re just building better illusions.

Eric Etienne

December 1, 2025 AT 11:46

Why are we even talking about this? Just don’t click links. Done. Problem solved. Stop overcomplicating it with blockchain registries and AI tools. If you’re dumb enough to send money to a random guy on WhatsApp saying ‘Taylor Swift is rich now,’ you’re not a victim, you’re a liability. Just unfollow celebrities. Problem solved.

Dylan Rodriquez

December 3, 2025 AT 11:44

I’ve spent the last year teaching my students, ages 14 to 65, how to spot these scams. And you know what? The ones who learn fastest aren’t the tech kids. They’re the grandparents. They’re the ones who remember when a phone call was proof. They don’t trust the video, they trust the process. I tell them: ‘If it feels like a gift, it’s a trap. If it feels like a test, it’s real.’ We don’t need more tools. We need to remember that the most powerful technology is still the human conversation. Show someone. Talk to them. Sit with them. That’s the antidote. Not a watermark. Not a blockchain. A person who cares enough to say, ‘Wait. Let’s check this.’

Amanda Ablan

December 3, 2025 AT 22:31

I used to think deepfakes were just a Hollywood problem, until my sister sent me a video of her favorite YouTuber ‘announcing’ a new skincare line. I checked the account. It was a fake. But I didn’t just report it. I sent her a voice note explaining how the lighting looked off on her cheek. She cried and said, ‘I thought she was my friend.’ That hit me. These aren’t just scams. They’re violations of emotional bonds. We need to treat them like that. Not just as fraud, but as trauma. The solution isn’t just tech, it’s compassion. Teach people to feel safe questioning what they love.

Mike Zhong

December 4, 2025 AT 14:43

As the author of this post, I want to thank everyone for the thoughtful responses. I didn’t expect this much depth. I’ve been working on digital literacy workshops in rural India, and your comments remind me why this matters. The ‘Global Deepfake Registry’ isn’t perfect, but it’s a start. And the fact that people are sharing stories like yours? That’s the real innovation. Keep talking. Keep checking. Keep being the pause between the scam and the click.